Highlights

- First-of-its-kind automated video generation product for the broadcast industry, enabling hyperlocal weather videos for mobile, CTV, broadcast, and web.

- Unveiled at NAB 2025 — Press coverage from

- The NAB debut generated a strong pipeline of new trial users.

- First AI-powered user features in the company; other groups looked to our project for their own needs.

Brian’s roles

- Lead Product Designer and Generalist: I managed a two-person design team and, when resources shifted, seamlessly transitioned to a hands-on generalist role.

- Design Ownership: I was responsible for all design duties, from UX research to UI execution, while running frequent customer input sessions to maintain project quality and velocity.

- Product Management Collaboration: I shared product and project management duties in a three-in-a-box model with the PM and lead developer.

- Legacy and Innovation: I balanced the constraints of legacy infrastructure with the use of the latest technology.

- Prompt Engineering: I handled prompt engineering for LLM content creation, including a complete rewrite of a 2,200-word prompt to increase reliability and conserve token use.

What problem are we solving?

Meteorologists are under immense pressure to create timely, accurate, and hyperlocal weather content for a growing number of platforms, including broadcast, web, and streaming services. Manually generating this content for each platform is a significant burden. ReelSphere was designed to solve this problem by automating the creation of scheduled, frequently published weather videos with live data, freeing up valuable time for “mets” to focus on forecasting and live on-air duties.

How did we know this is the right problem to solve?

While the strategic direction came from leadership based on their customer conversations, I owned the research and validation process that shaped how we’d execute on hyperlocal weather videos.

Through dozens of customer interviews over a year—from executive meetings to industry conferences—I identified the core constraints our customers faced. They were eager to create compelling content for streaming and CTV platforms but couldn’t add staff to handle the extra production workload. Our existing Prism product was a workhorse that automated basic video publishing, but it couldn’t customize content by location or add voiceover, limiting its usefulness for the hyperlocal, personalized content customers needed.

To validate the concept, we partnered with Smith and Geiger, a respected TV industry research firm, to test prototyped hyperlocal weather videos with potential viewers. The positive response data helped confirm the value for customers.

Initially, customers were skeptical of AI being involved with their content, fearing hallucinations and viewer pushback. As AI voice synthesis improved dramatically during development, customer attitudes shifted. They became believers when they saw quality scripts and natural-sounding voiceover in our proofs of concept. Some even wanted to use ReelSphere videos on linear TV, where they struggled to find on-air talent.

This research process confirmed that automating hyperlocal video creation addressed a genuine customer need while opening new monetization opportunities that excited their advertising teams. Rather than simply retrofitting our existing Prism product, the insights from customer interviews shaped our decision to build ReelSphere as a dedicated experience designed specifically for this workflow.

How did we build the product right?

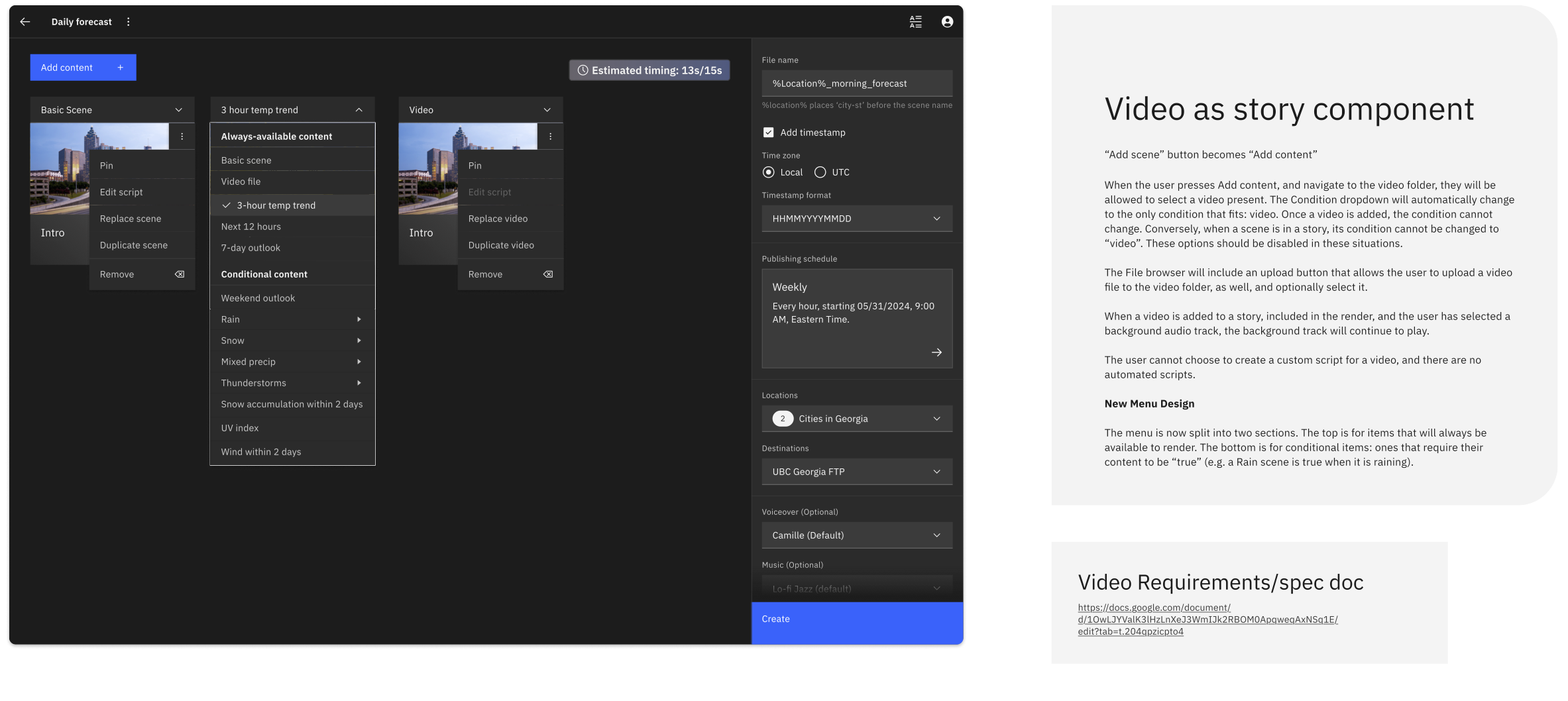

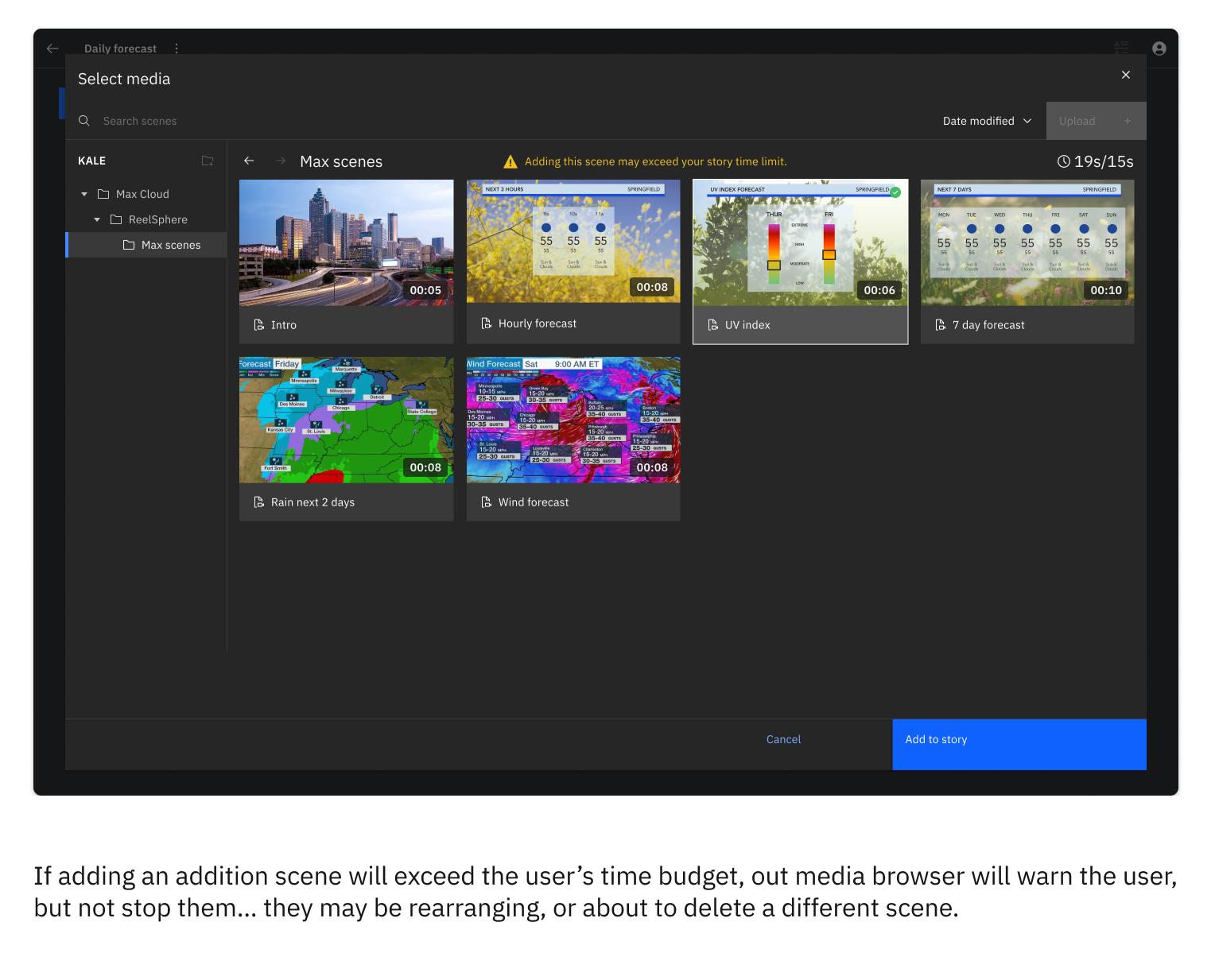

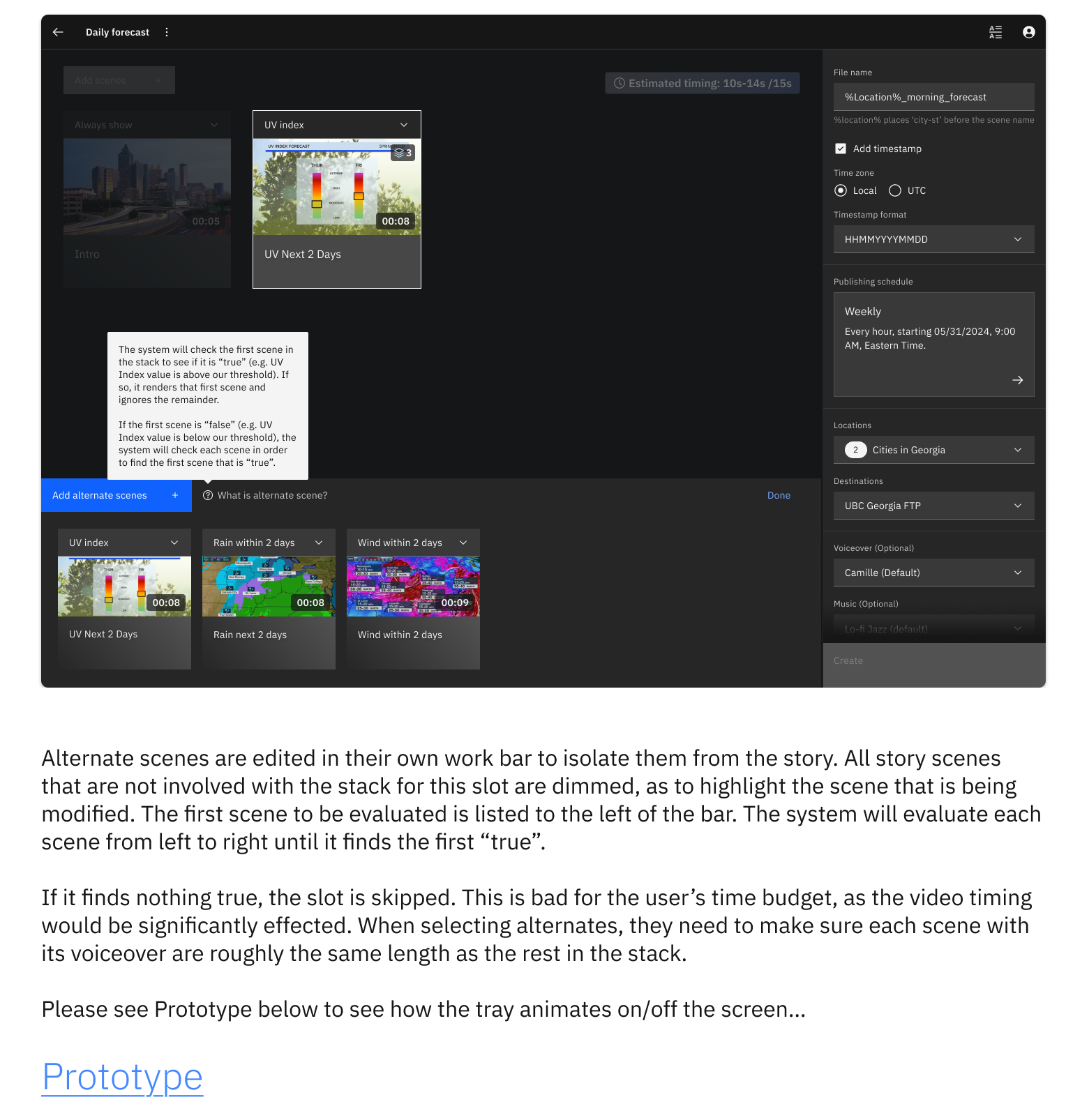

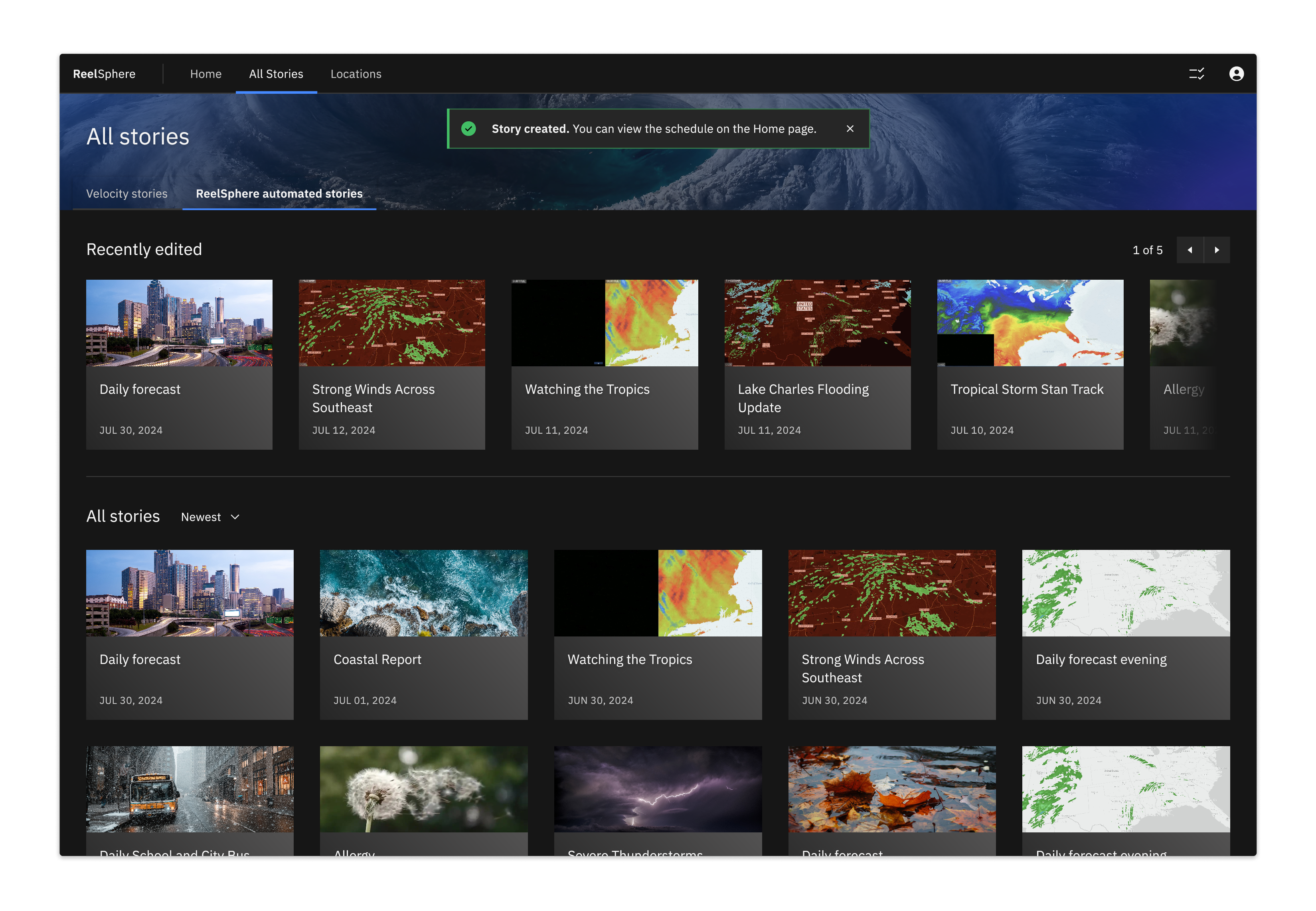

With the problem validated, version 1 focused on letting users build stories from an existing library of Max scenes and variables, then schedule those stories to auto-publish. At publication time, ReelSphere dynamically chooses the appropriate scenes based on each location’s current weather data, updates the graphics, and adds pre-written descriptive text for each scene. These are the same top-quality insights used on TWC’s weather.com and The Weather Channel apps. The script can be manually augmented or completely overwritten by a meteorologist and then synthesized with a customizable AI voice.
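To make the publish-time flow above concrete, here is a minimal sketch of the idea rather than the production implementation. Every name in it (`Weather`, `Scene`, `SCENE_LIBRARY`, `build_story`), the weather fields, and the thresholds are hypothetical and chosen only for illustration. It shows scenes being selected from a library based on a location's current data, pre-written text being filled in, and a meteorologist's override replacing the default script.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Weather:
    """Hypothetical snapshot of one location's current conditions."""
    location: str
    temp_f: float
    precip_chance: float  # 0.0 to 1.0
    condition: str


@dataclass
class Scene:
    """Hypothetical scene: a rule for when it applies plus pre-written text."""
    name: str
    applies: Callable[[Weather], bool]
    script_template: str


# Illustrative library; the real scenes and rules are not described in this write-up.
SCENE_LIBRARY: List[Scene] = [
    Scene("current_conditions", lambda w: True,
          "Right now in {location}, it's {temp_f:.0f} degrees and {condition}."),
    Scene("rain_outlook", lambda w: w.precip_chance >= 0.4,
          "There's a {precip_pct} percent chance of rain in {location}."),
    Scene("heat_alert", lambda w: w.temp_f >= 95,
          "It's a hot one in {location}, so stay hydrated."),
]


def build_story(weather: Weather,
                overrides: Optional[Dict[str, str]] = None) -> List[Tuple[str, str]]:
    """Pick the scenes that fit the weather and attach a script to each.

    `overrides` maps a scene name to text written by a meteorologist,
    which replaces the pre-written script for that scene.
    """
    overrides = overrides or {}
    story = []
    for scene in SCENE_LIBRARY:
        if not scene.applies(weather):
            continue  # skip scenes that do not match this location's weather
        default_script = scene.script_template.format(
            location=weather.location,
            temp_f=weather.temp_f,
            condition=weather.condition,
            precip_pct=round(weather.precip_chance * 100),
        )
        story.append((scene.name, overrides.get(scene.name, default_script)))
    return story


if __name__ == "__main__":
    atlanta = Weather("Atlanta", 72.0, 0.6, "cloudy")
    for name, script in build_story(atlanta):
        print(f"{name}: {script}")
```

In this sketch the resulting scripts would then be handed to a voice synthesis step, which is left out here because nothing about that interface is described above.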

The earliest builds of version 1.1, shown to great acclaim at the 2025 National Association of Broadcasters conference, were still a work in progress. I collaborated with our internal AI/ML team, which built the generative script backend, but a key issue remained: the LLM-generated scripts were inconsistent. I was responsible for a complete rewrite of the script-generating prompt, slimming it down from over 2,200 words to around 1,800 and applying best practices for LLM prompting. This overhaul enabled the system to reliably create LLM-written scripts for each scene with a tunable style and tone.

With these features set to reach customers soon, ReelSphere will empower meteorologists with a vital tool to serve the demands of modern media.